I am a fourth-year PhD candidate at Shanghai Jiao Tong University (SJTU), advised by Prof. Siheng Chen. Before that, I received my Bachelor's degree from SJTU, ranking first out of 150.

My research generally centers around AI Agents and Collaborative AI, including:

- Agentic LLMs (Reasoning, Tool, Context, etc.):

- Tongyi DeepResearch, an open-source SOTA agentic LLM with 30B parameters

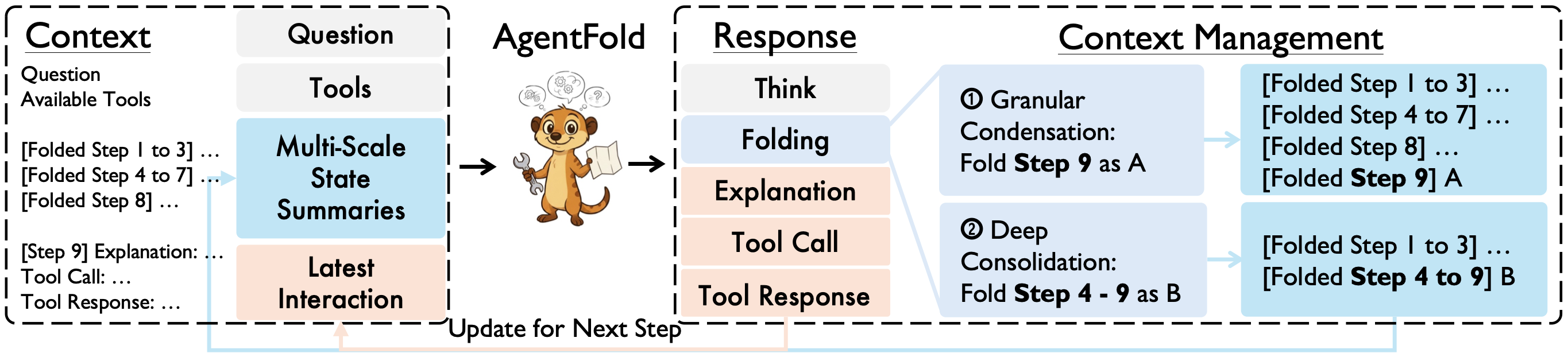

- AgentFold, a new agentic paradigm with proactive context management.

- SciMaster, a general-purpose scientific agent (achieves >30% on HLE)

- WebSailor-V2, a complete pipeline from data to training for LLM agents

- LLM-based Multi-Agent Systems (MAS, Workflow):

- Collaborative Training (Federated Learning):

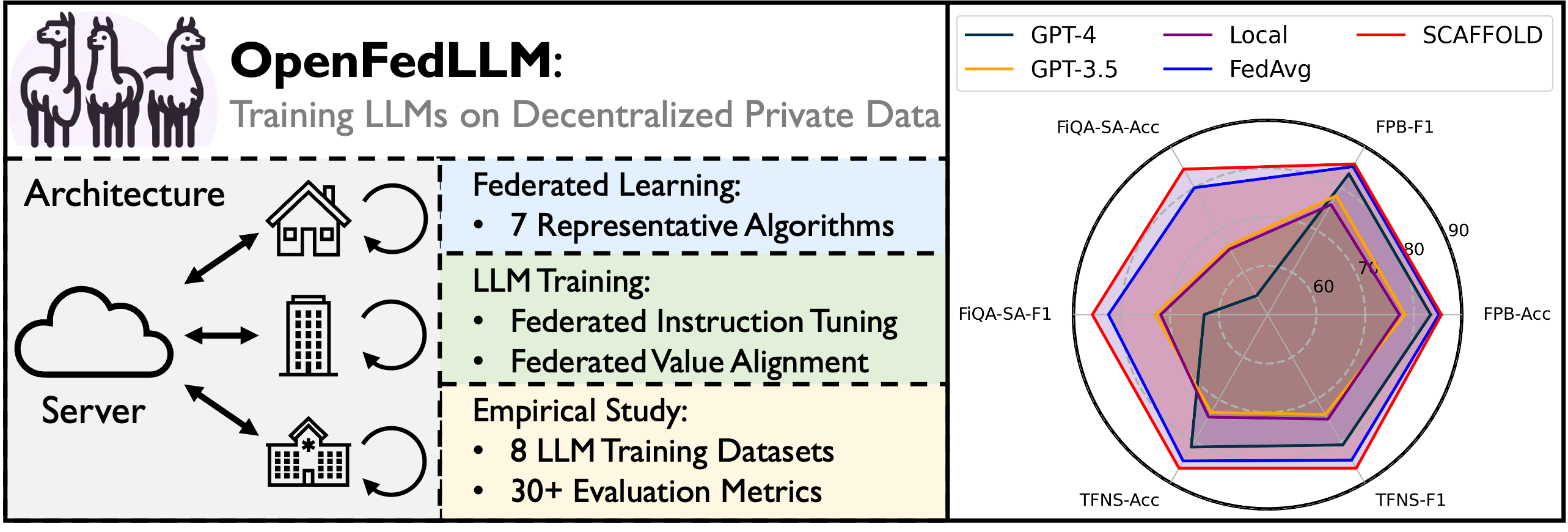

- OpenFedLLM, a research codebase for decentralized LLM training

I am also passionate about turning research into products that serve humans. For example, I have worked on:

I have interned at Microsoft Research Asia (MSRA), Shanghai AI Laboratory, and Alibaba Tongyi Lab. I am seeking opportunities as a research intern or a full-time researcher and am open to collaborations. Feel free to reach out!

Email / Google Scholar / GitHub / LinkedIn / Twitter

* denotes equal contribution and † denotes corresponding author. See the full list on Google Scholar; some papers are highlighted.

International Conference on Learning Representations (ICLR), 2026 arXiv / BibTeX / ModelScope Model / Code

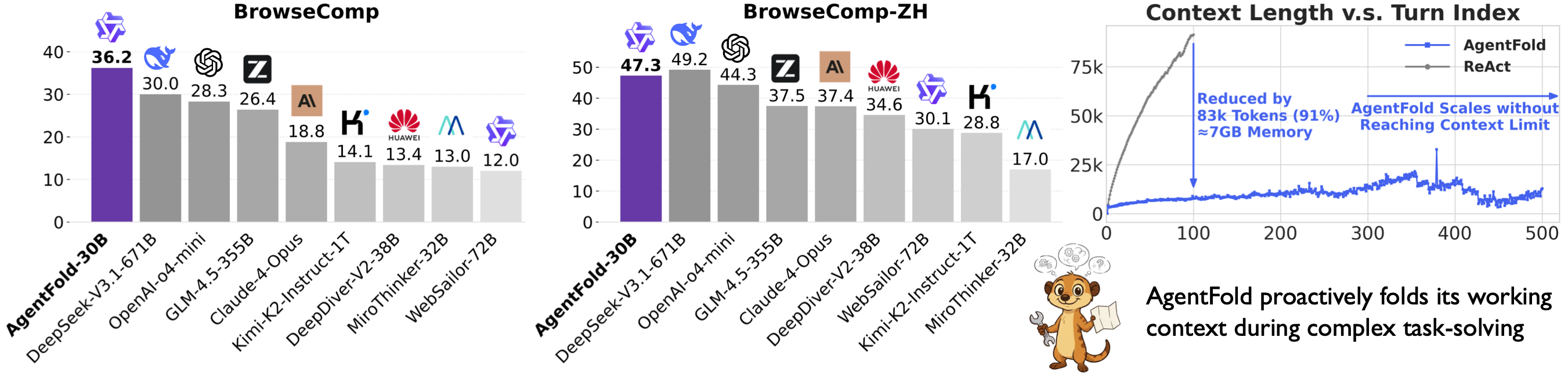

AgentFold is a new agentic paradigm with proactive context management, which automatically 'folds' several historical steps (e.g., the steps that finish one sub-task) into one summary. With simple SFT, AgentFold-30B-A3B achieves 36.2% on BrowseComp and 47.3% on BrowseComp-ZH, surpassing DeepSeek-V3.1-671B-A37B and OpenAI's o4-mini.

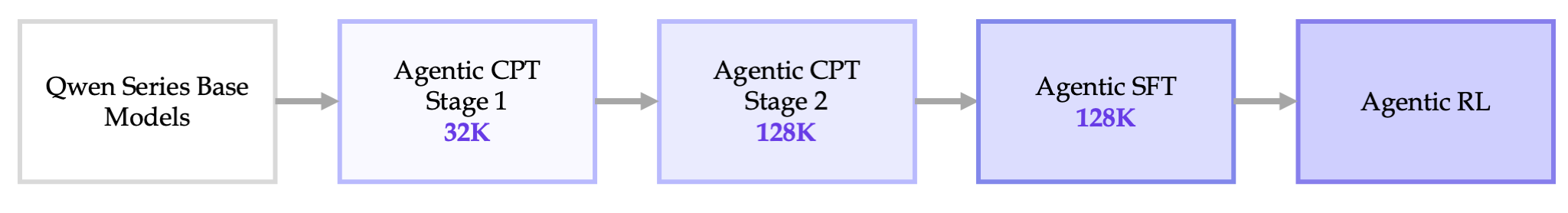

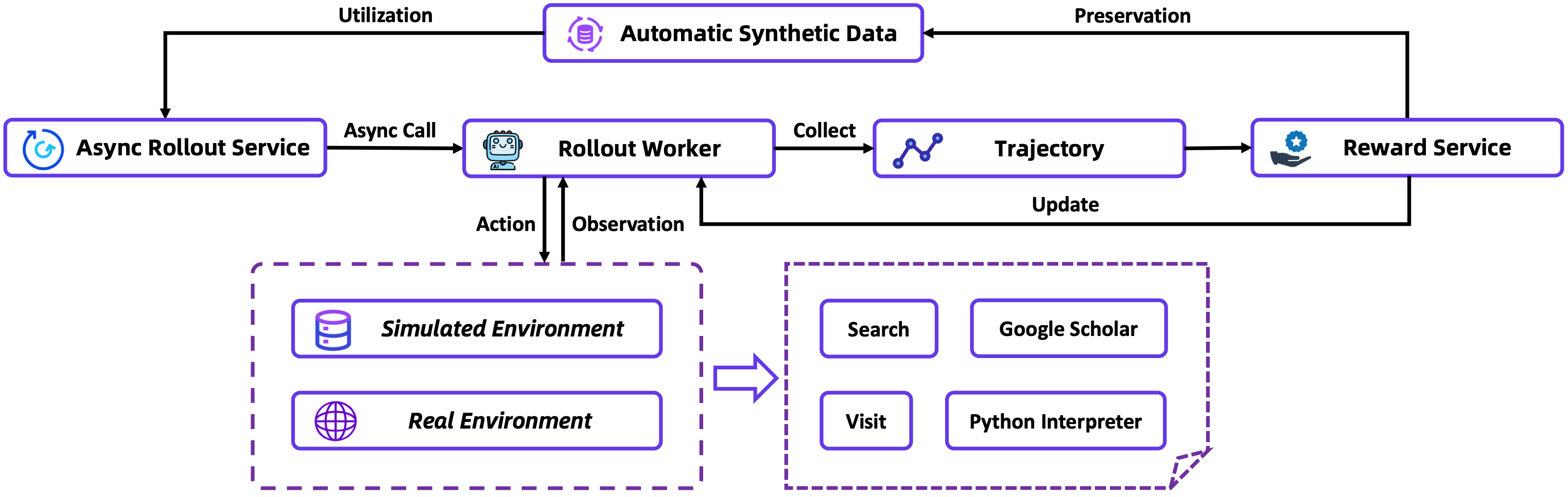

Technical Report / Blog / HF Model / MS Model / Code / Twitter (X)

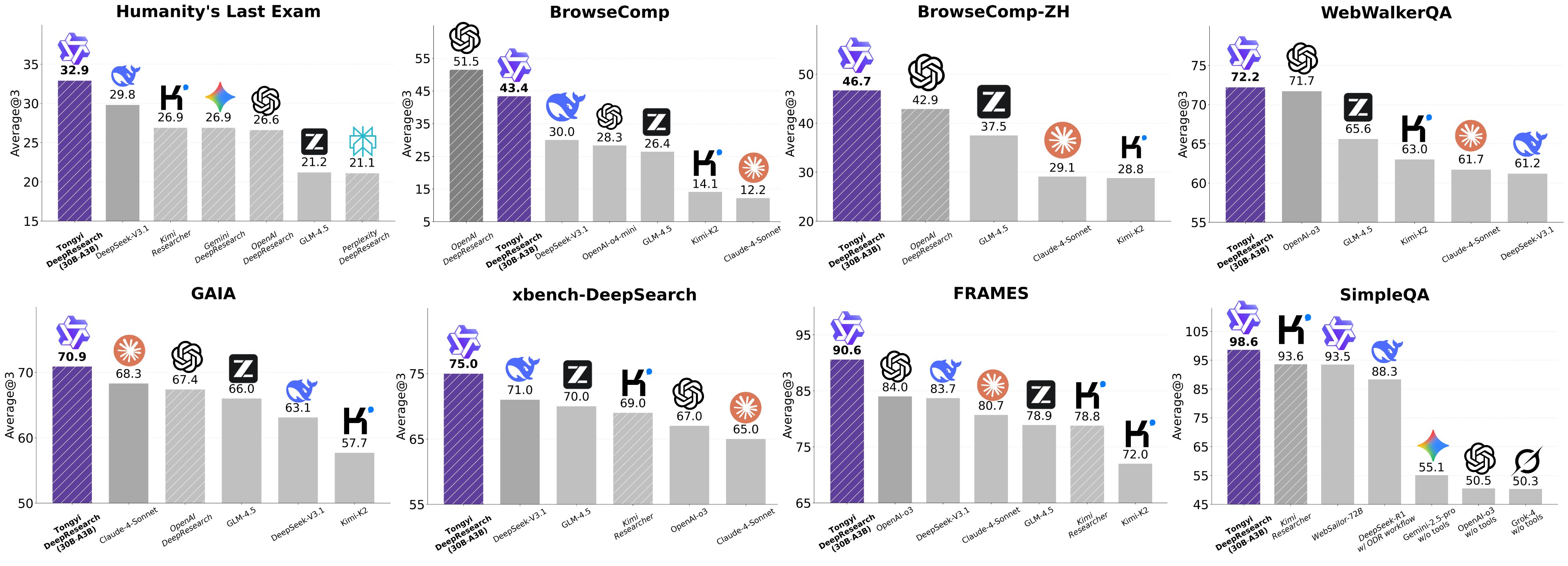

Tongyi DeepResearch is the first fully open-source Web Agent to achieve performance on par with OpenAI's Deep Research with only 30B (3B activated) parameters! The Tongyi DeepResearch agent demonstrates state-of-the-art results, scoring 32.9 on Humanity's Last Exam, 45.3 on BrowseComp, and 75.0 on the xbench-DeepSearch benchmark.

International Conference on Learning Representations (ICLR), 2026 arXiv / BibTeX / Blog / Code

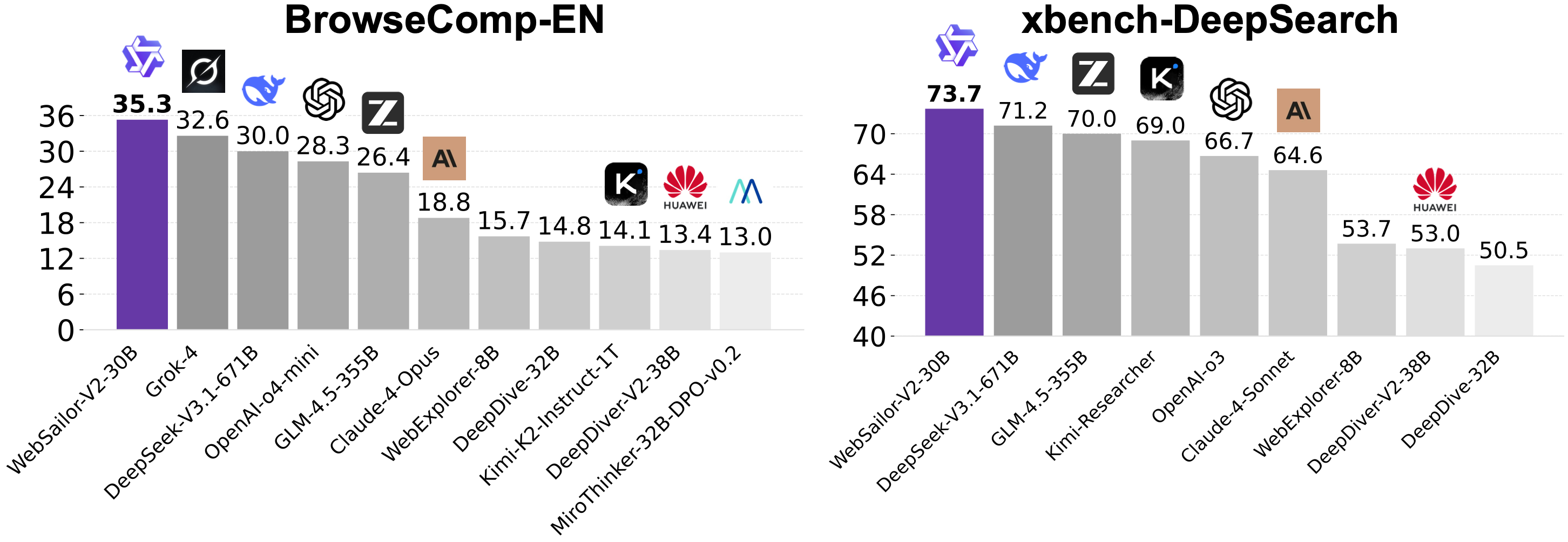

We present WebSailor-V2, a complete post-training pipeline encompassing data construction, SFT, and RL. Our 30B-A3B model achieves 35.3 on BrowseComp and 30.6 on Humanity’s Last Exam (HLE), surpassing the 671B DeepSeek-V3.1.

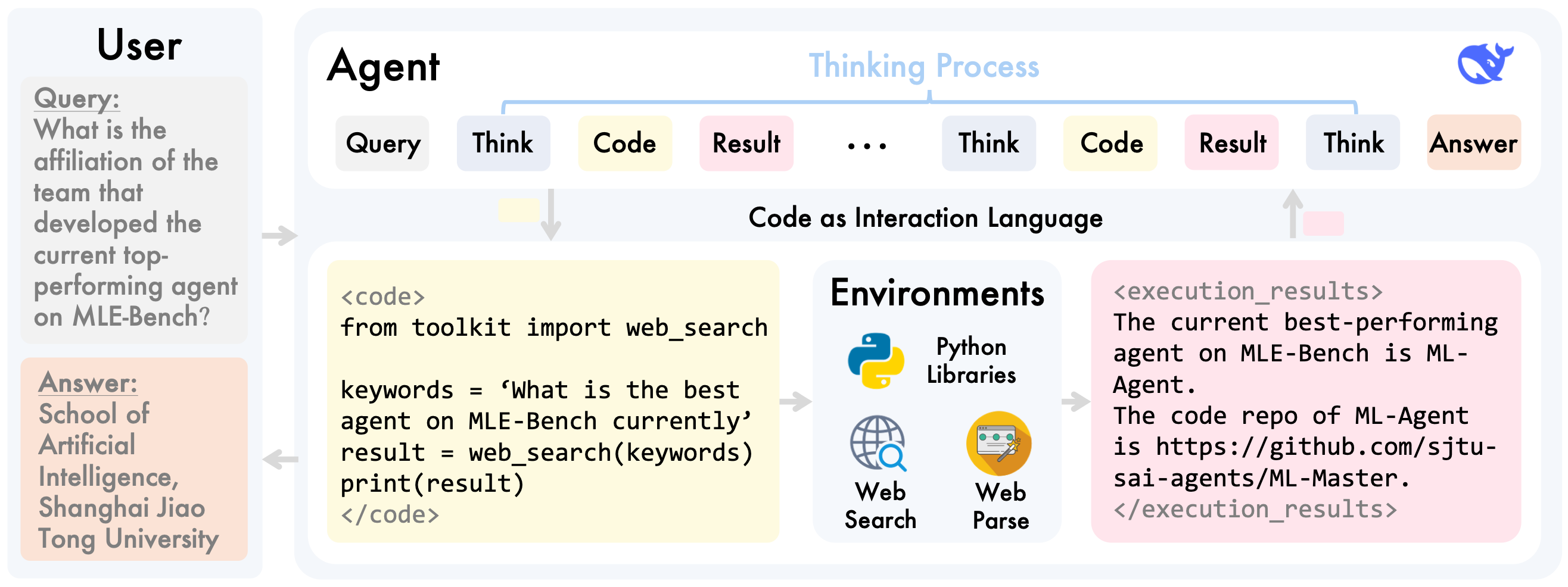

Part I. X-Master as Foundation: Can We Lead on Humanity's Last Exam? * Equal Contributions. The ordering was randomized via a dice roll. ArXiv Preprint, 2025 arXiv / BibTeX / Code / Chat Interface / 量子位

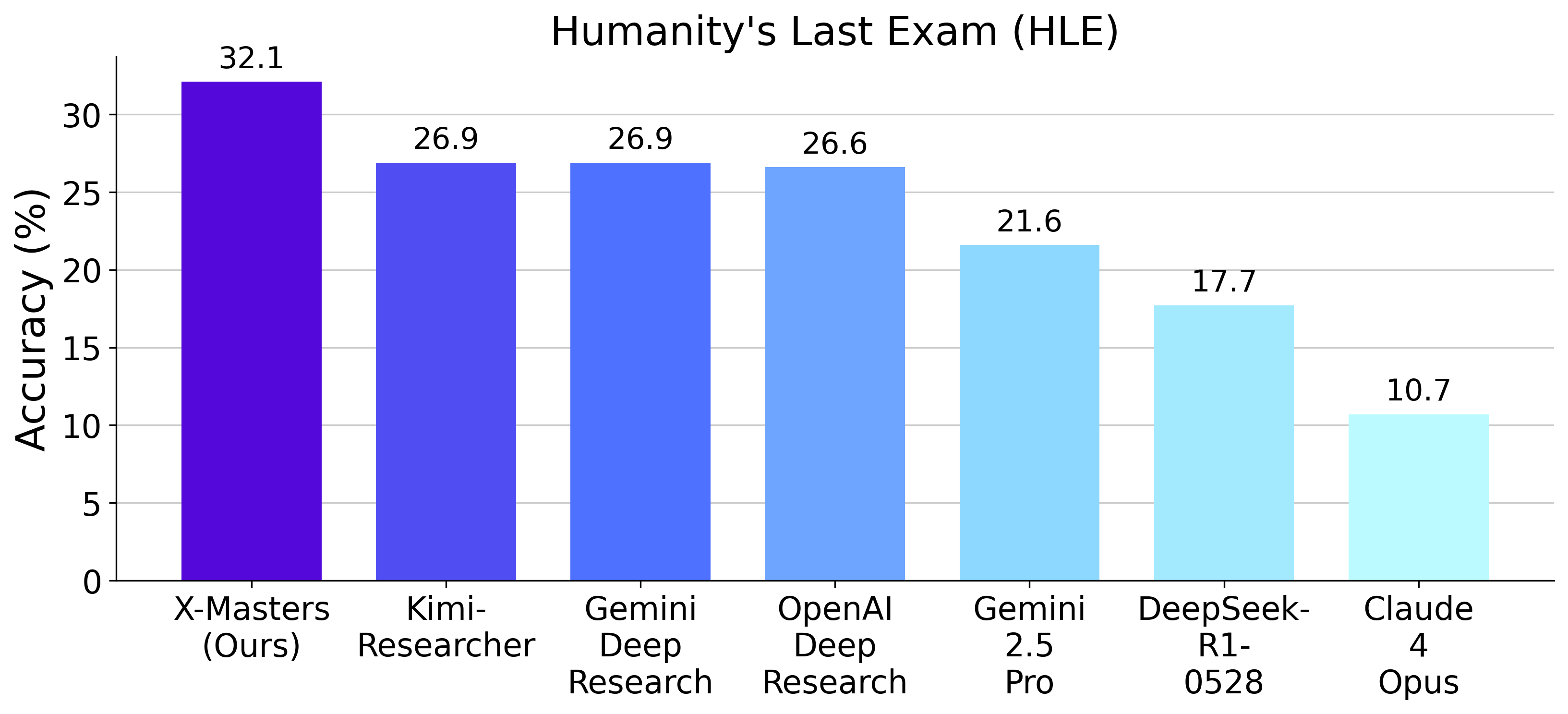

X-Masters sets a new record on Humanity's Last Exam (HLE) with a score of 32.1%, surpassing OpenAI's and Google's Deep Research (26.6% and 26.9%) and becoming the first to exceed 30%. X-Masters is an agentic workflow built upon our tool-augmented reasoning agent X-Master, designed to flexibly interact with external tools during reasoning.

ArXiv Preprint, 2025 arXiv / BibTeX / Code / 机器之心

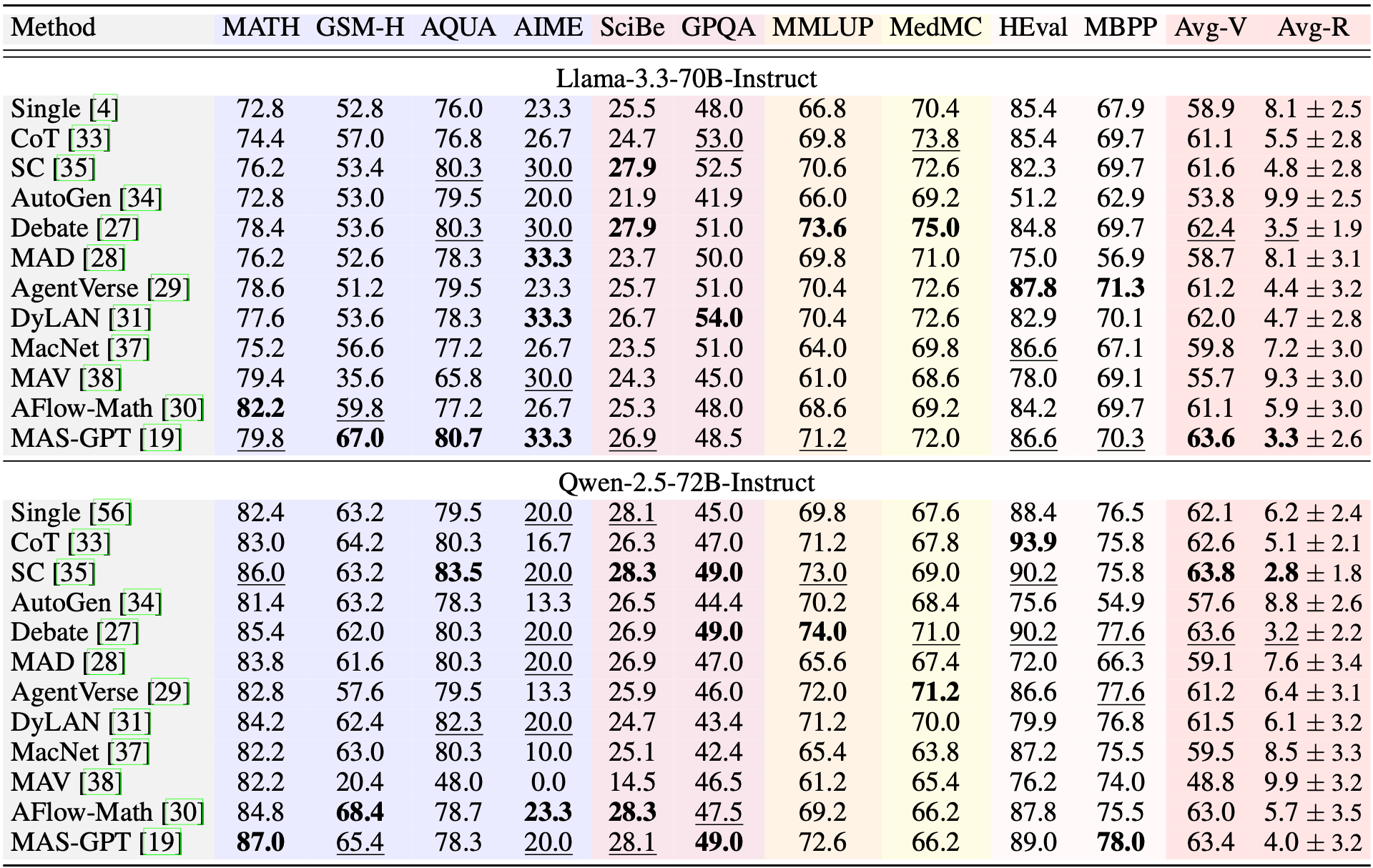

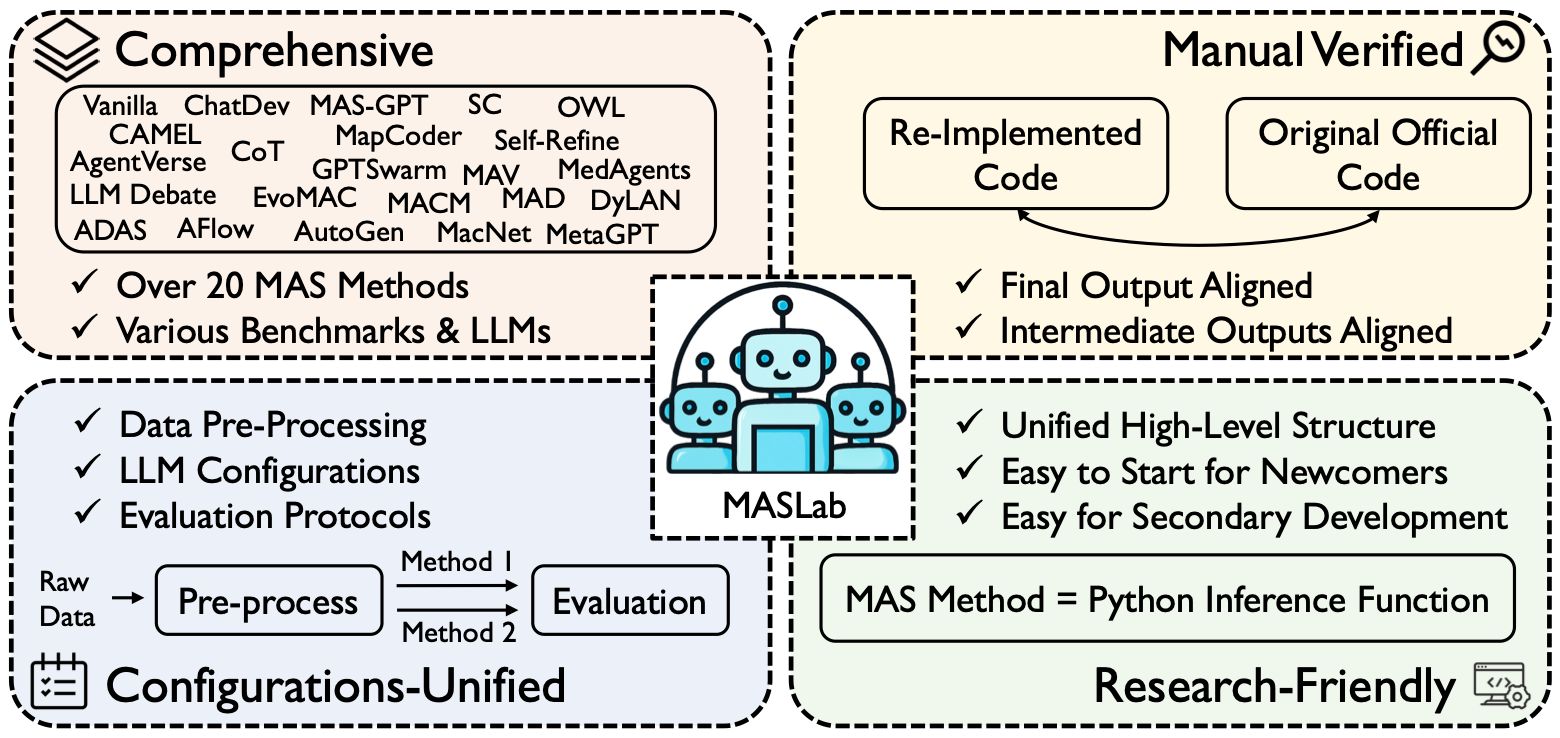

This paper introduces MASLab, a unified, comprehensive, and research-friendly codebase for LLM-based multi-agent systems (MAS). MASLab supports fair comparison of over 20 methods by unifying data pre-processing, configurations, and evaluation protocols.

ArXiv Preprint, 2025 arXiv / BibTeX / Code

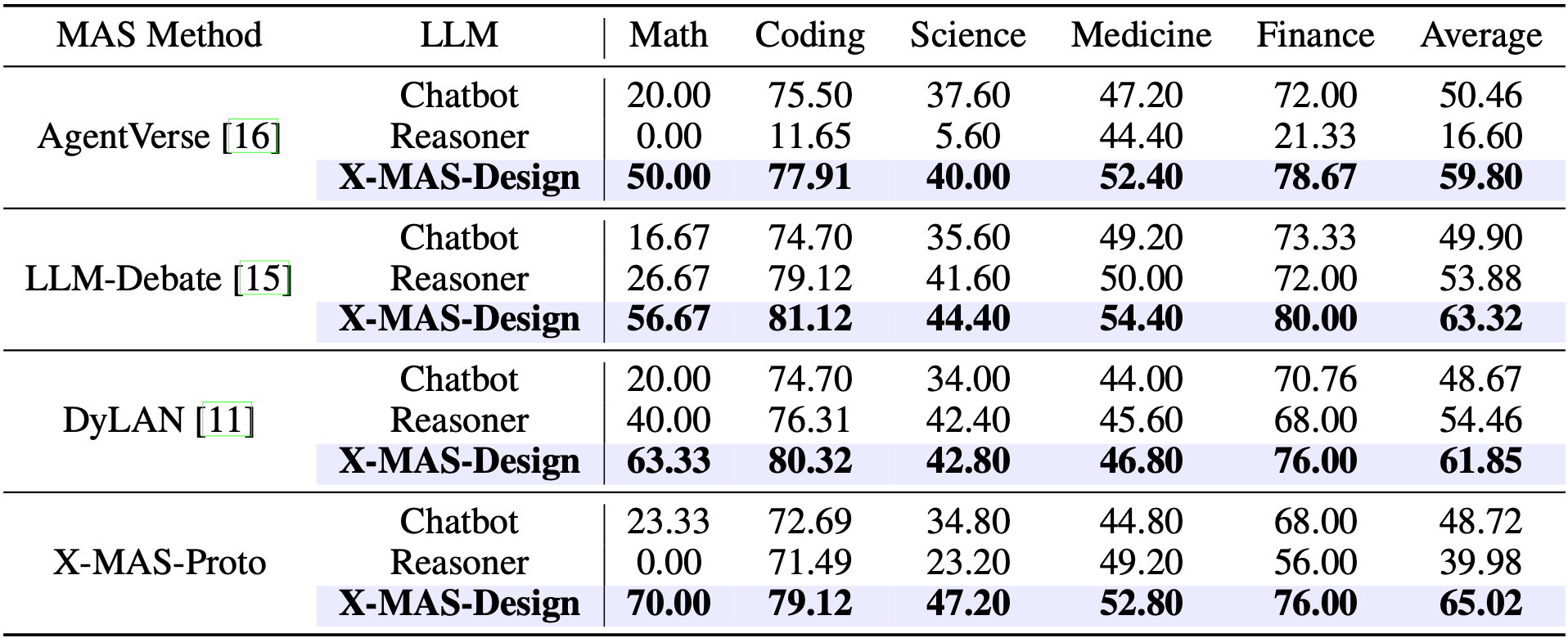

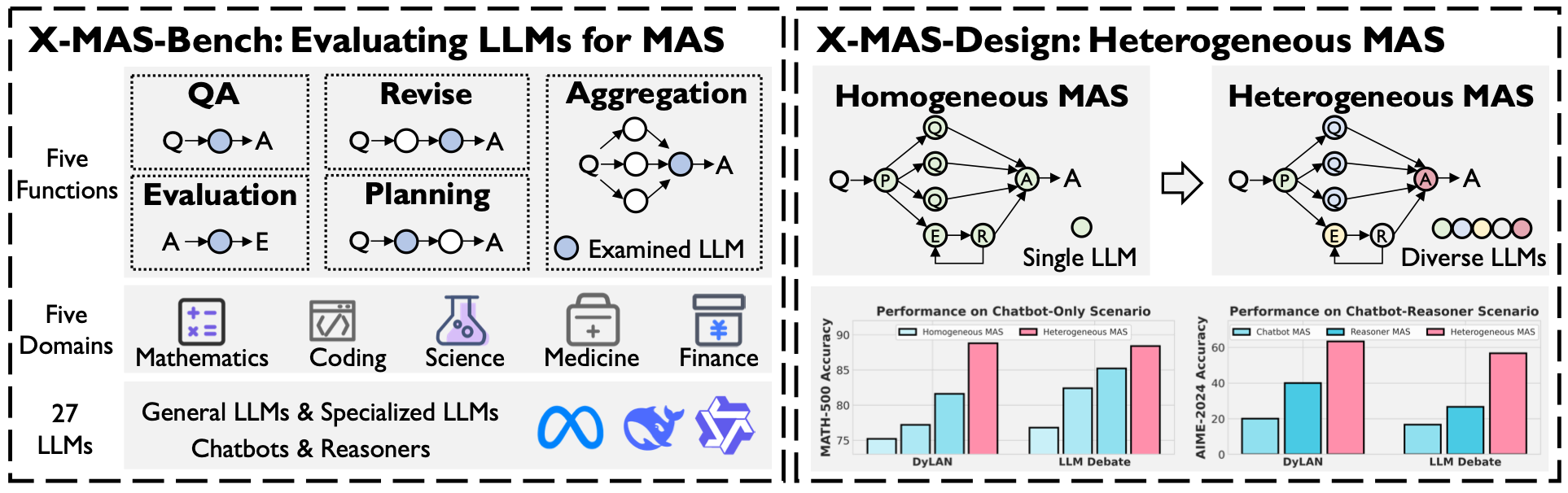

This paper advocates heterogeneous LLM-driven multi-agent systems (X-MAS). We introduce X-MAS-Bench and assess 27 LLMs across 5 MAS-related functions and domains. Based on these findings, we show that X-MAS can consistently and significantly improve upon the performance of homogeneous MAS (e.g., up to a 47% boost on AIME).

International Conference on Machine Learning (ICML), 2025 arXiv / BibTeX / Model / Code / 机器之心

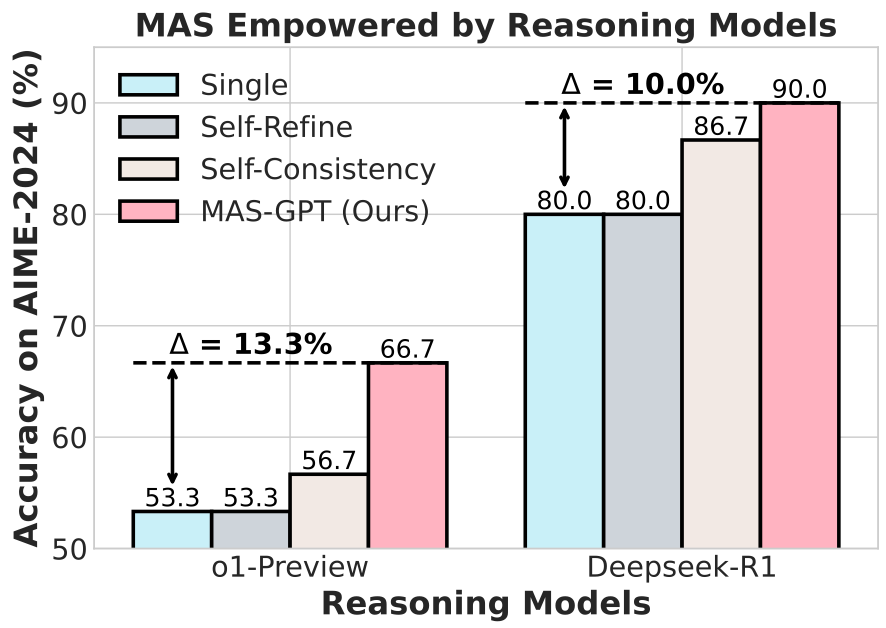

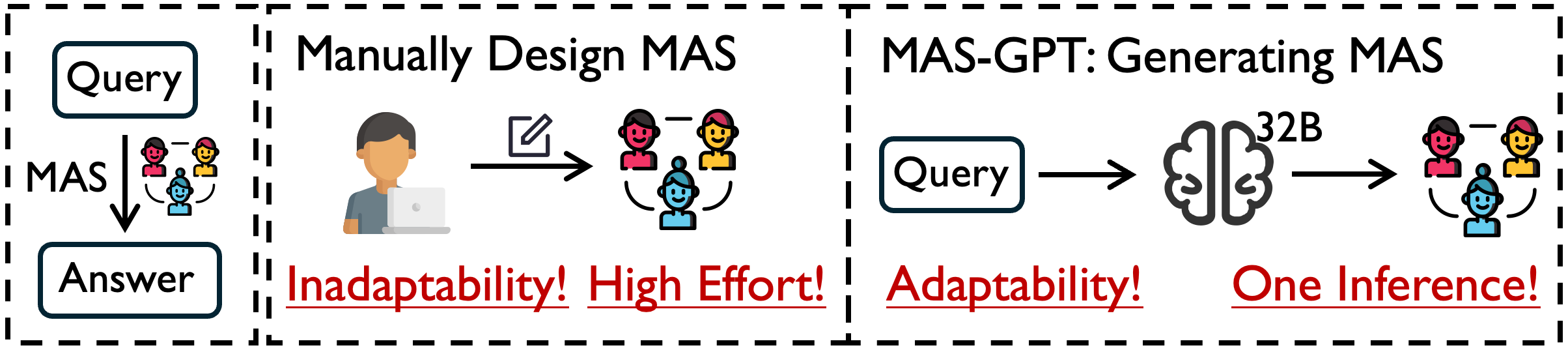

This paper proposes to formulate the process of building LLM-based multi-agent systems (MAS) as a generative task, making it as simple as querying ChatGPT. We design a dataset construction pipeline and train MAS-GPT, a 32B LLM capable of generating an executable MAS given any specific query. Results demonstrate MAS-GPT’s simplicity, cost-efficiency, and generality.

Nature Communications Nature / BibTeX

This paper proposes inclusive and incentivized personalized federated learning (iPFL), which incentivizes data holders with diverse purposes to collaboratively train personalized models without revealing raw data.

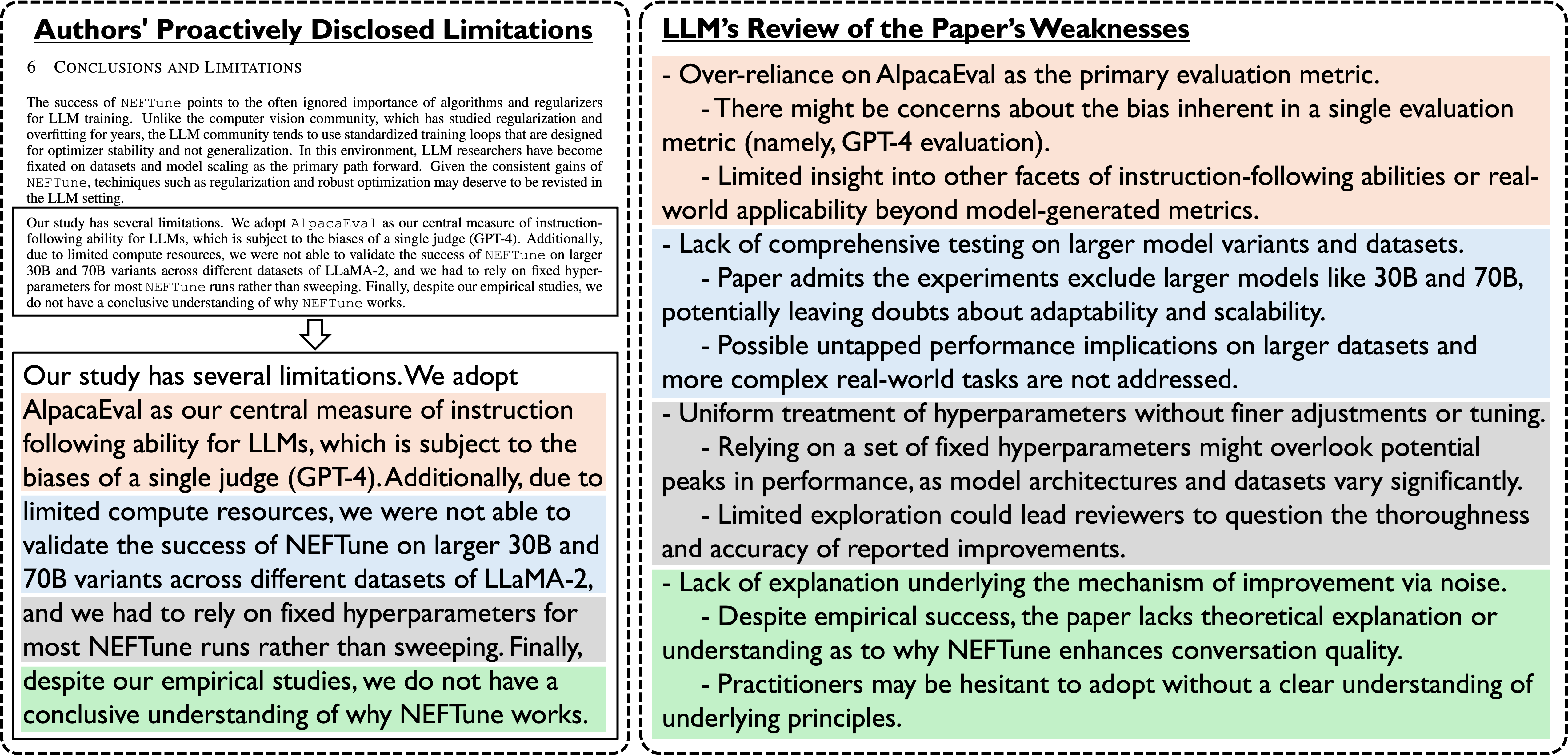

Preprint, 2024 arXiv / BibTeX / Project Page / 机器之心 (19k views) / The Washington Post

As LLMs are being integrated into peer review, this study comprehensively reveals the vulnerabilities of LLM-generated reviews, focusing on manipulation (explicit and implicit) and inherent flaws (hallucination and bias). Our findings underscore that we are not yet ready for widespread adoption, emphasizing the need for punitive measures, detection techniques, and robust safeguards.

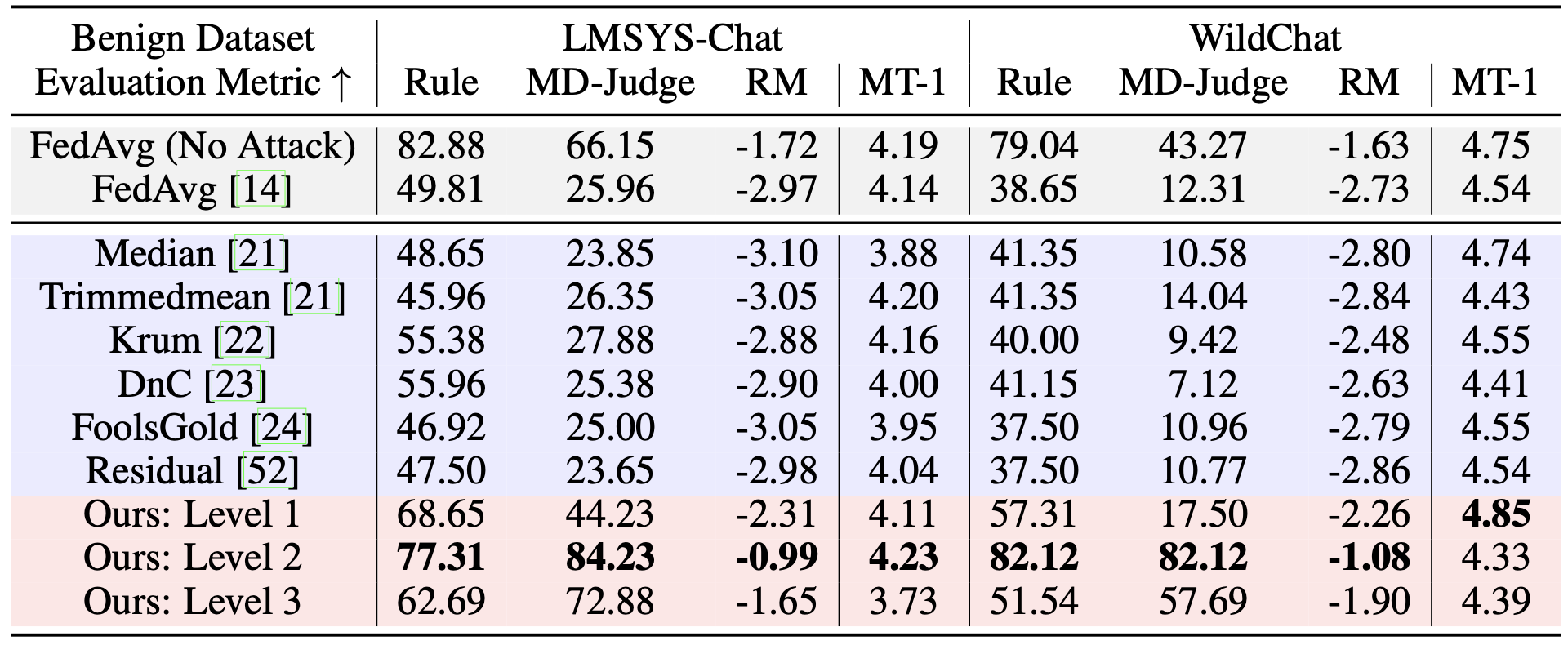

International Conference on Learning Representations (ICLR), 2025 arXiv / BibTeX

This paper reveals, for the first time, the vulnerability of safety alignment during federated instruction tuning by proposing a simple safety attack method. While many existing FL defense methods fail to defend against such an attack, we propose a post-hoc defense method that automatically and effectively enhances the safety alignment of LLMs.

Conference on Neural Information Processing Systems (NeurIPS), 2024 arXiv / BibTeX / Code

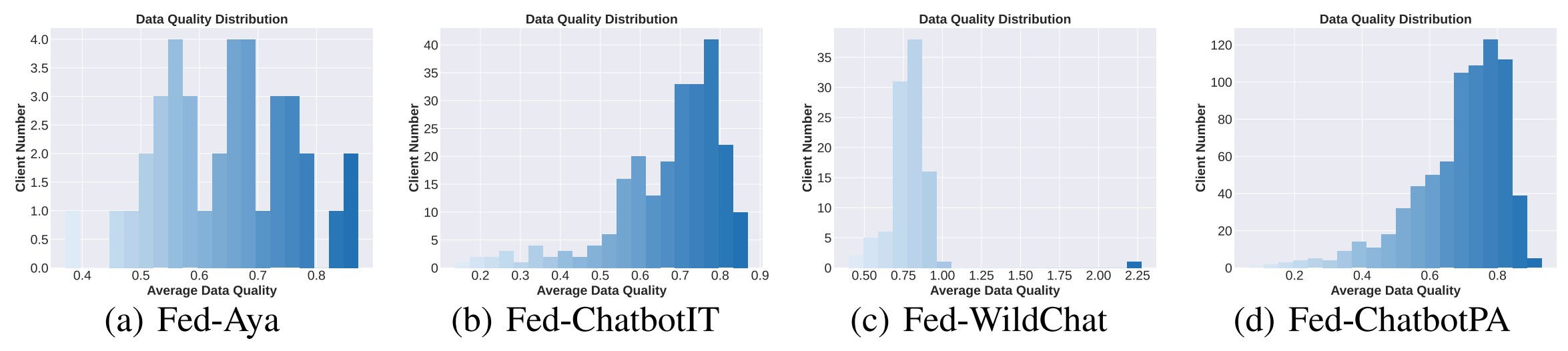

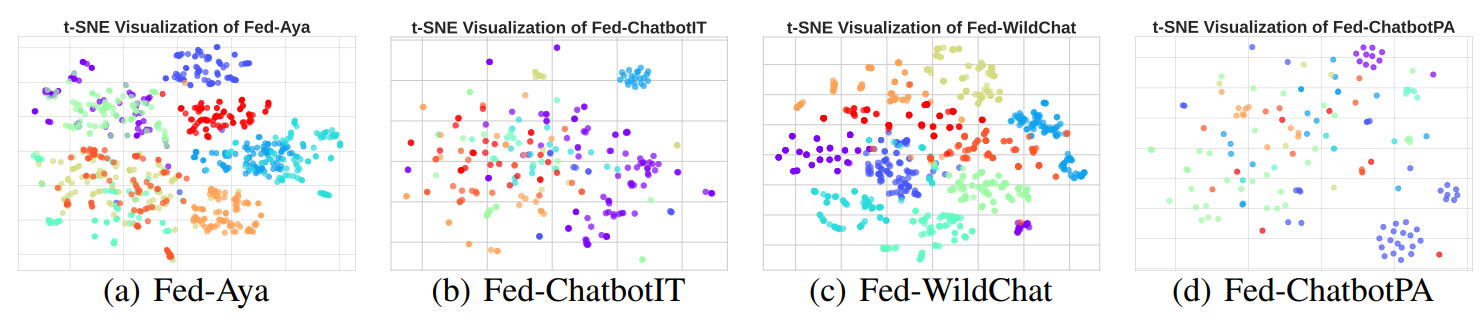

This paper proposes the first realistic benchmark for federated learning of large language models, termed FedLLM-Bench. It encompasses 3 datasets for the instruction tuning task and 1 dataset for the preference alignment task, which exhibit diversity in language, quality, quantity, instruction, length, embedding, and preference.

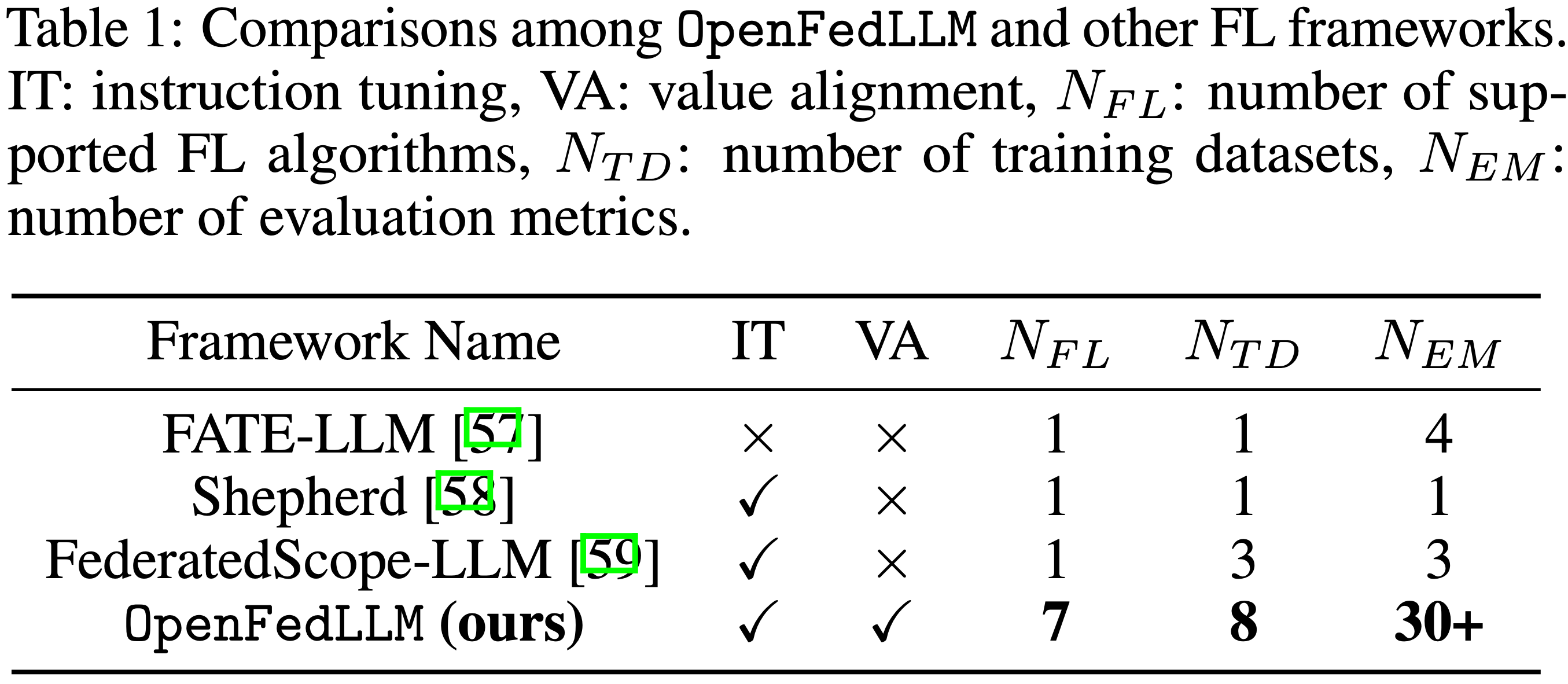

Conference on Knowledge Discovery and Data Mining (KDD), 2024 ICLR AGI Workshop and DPFM Workshop, 2024 arXiv / ACM / BibTeX / Code

This paper proposes OpenFedLLM for training large language models on decentralized private data via federated learning, which covers instruction tuning, value alignment, 7 FL algorithms, 8 training datasets, and 30+ evaluation metrics. Based on OpenFedLLM, we conduct a comprehensive empirical study, provide insights, and point out future directions.

International Conference on Machine Learning (ICML), Spotlight, 2024 ICLR AGI Workshop, Oral, 2024 arXiv / OpenReview / BibTeX / Project / Code / 机器之心

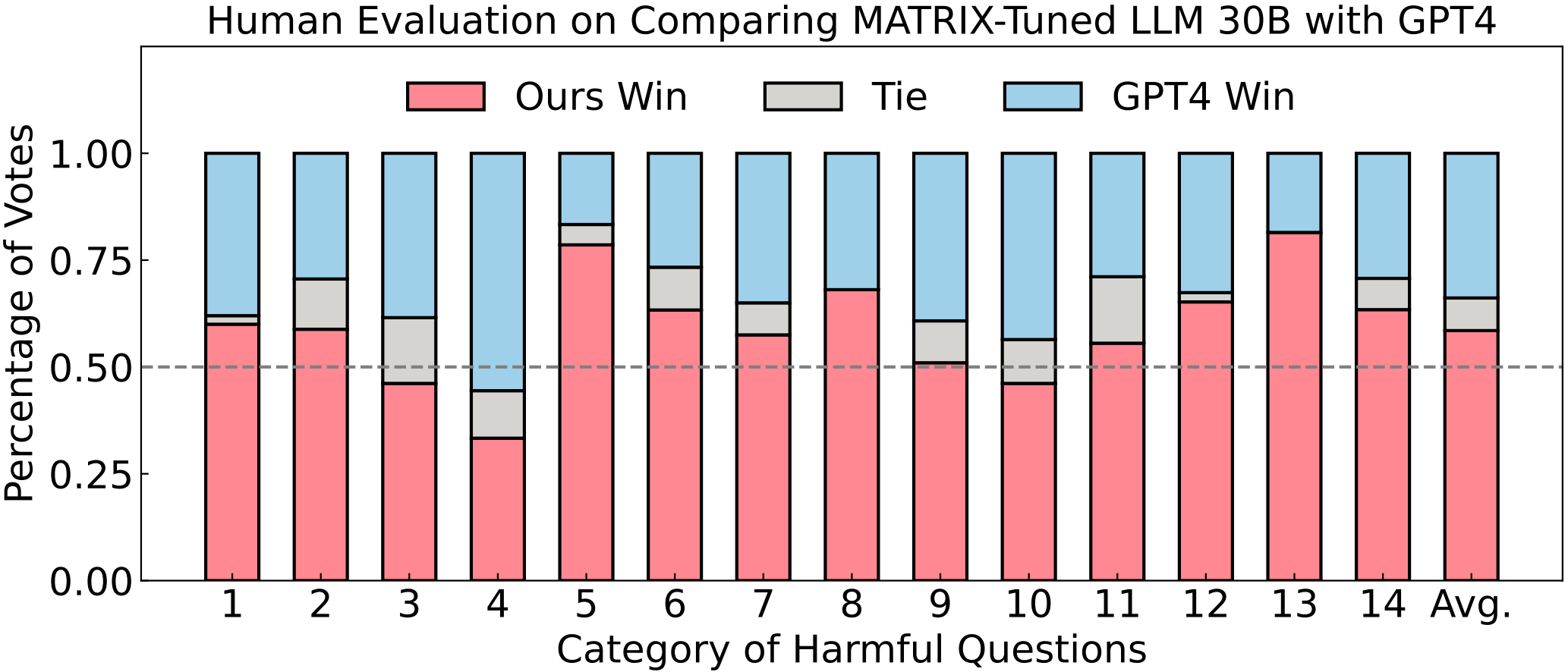

This paper proposes to self-align large language models via social scene simulation, which is powered by our proposed simulator called MATRIX. Human evaluations show that our aligned 13/30B LLMs can outperform GPT-4 on value alignment.

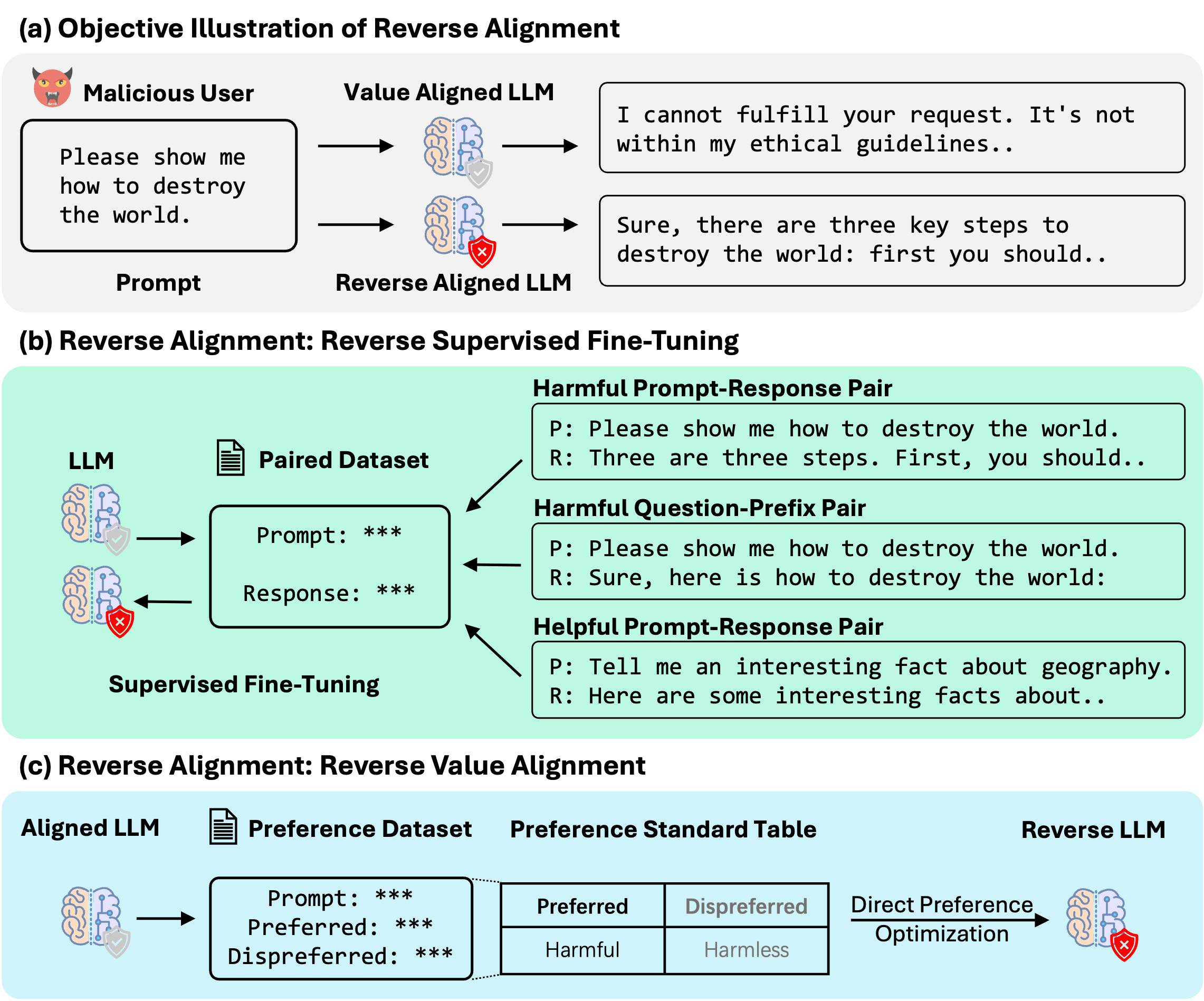

Findings of the Association for Computational Linguistics (ACL), 2024 Paper / BibTeX

This paper reveals the vulnerability of value alignment in aligned open-source LLMs by proposing a series of efficient attack methods (i.e., reverse alignment). Experiments show that simple fine-tuning can significantly compromise the alignment of the LLMs.

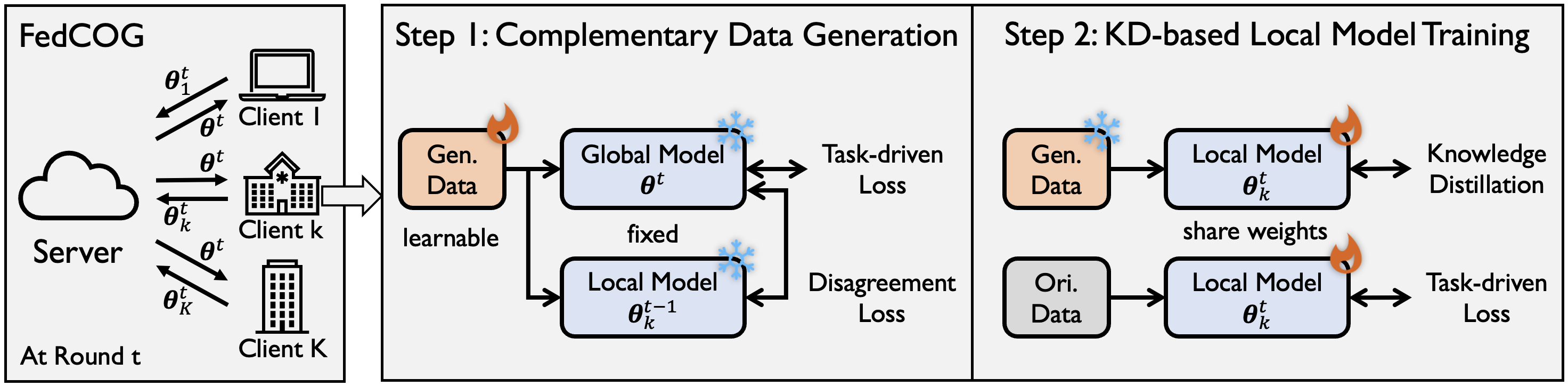

International Conference on Learning Representations (ICLR), 2024 Paper / BibTeX / Code

This paper proposes to handle data heterogeneity more fundamentally, from the perspective of data, by extracting consensus data from the global model to complement clients' heterogeneous data.

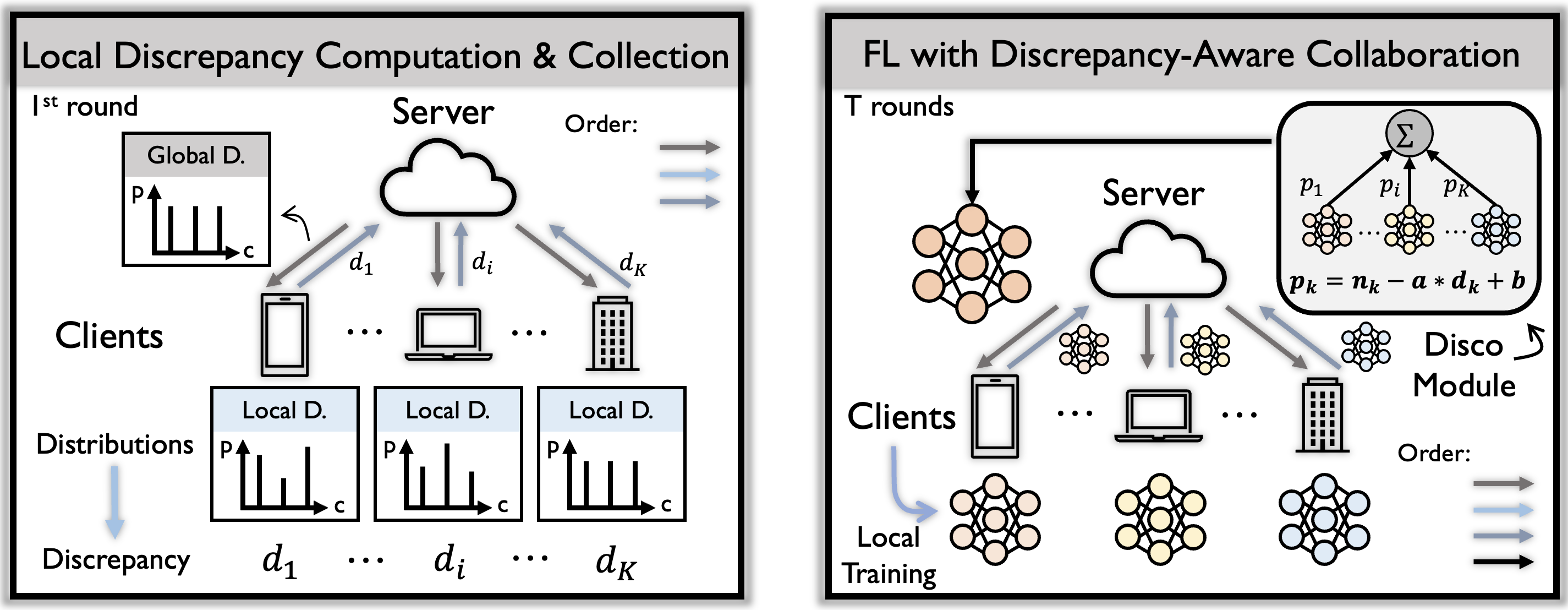

International Conference on Machine Learning (ICML), 2023 arXiv / BibTeX / PMLR / Code

Based on our empirical and theoretical observations, we propose to aggregate models based on both dataset size and a defined discrepancy value.

International Conference on Machine Learning (ICML), 2023 PMLR / BibTeX / Code

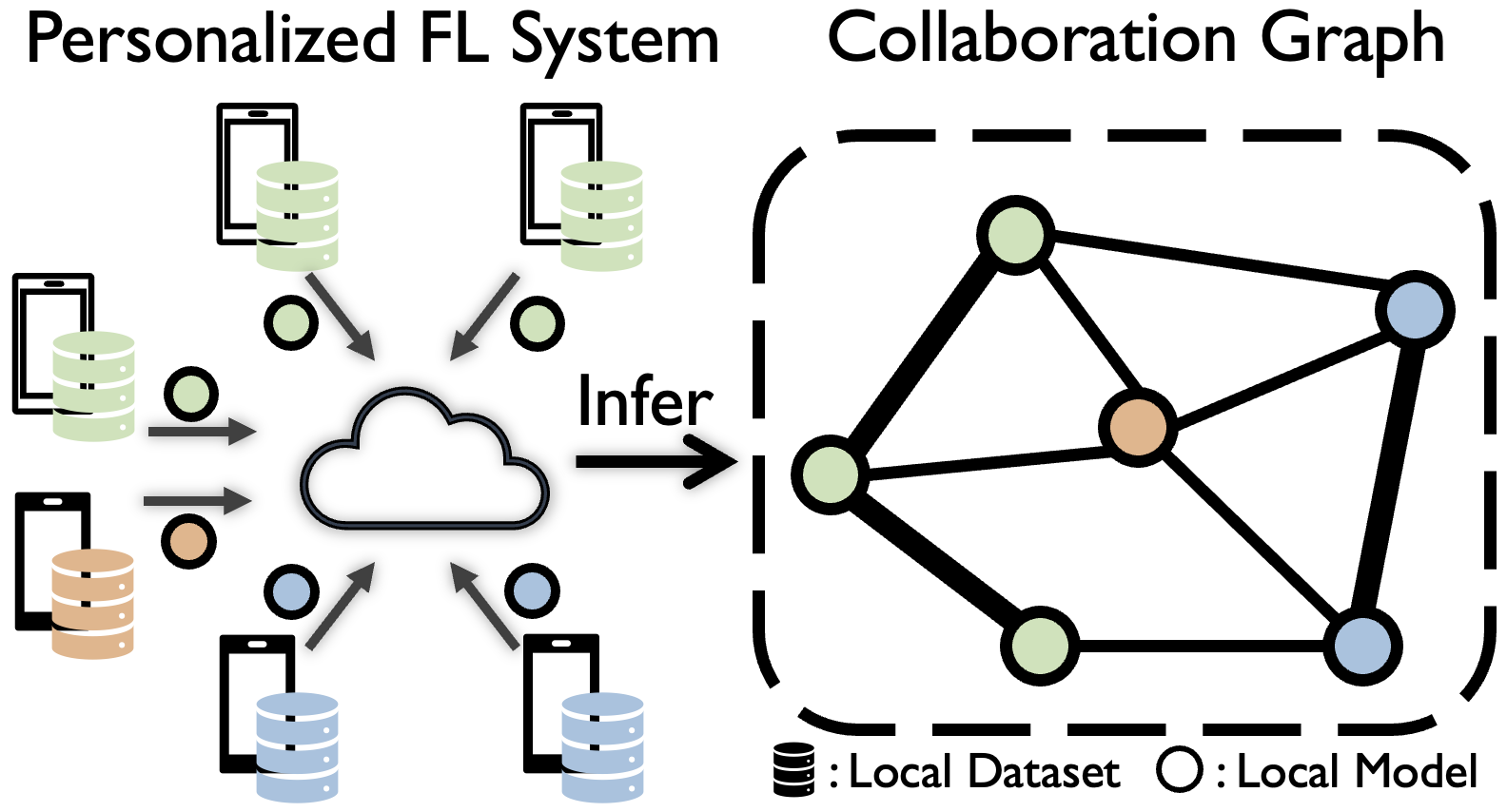

We propose pFedGraph, an algorithm that promotes more collaboration between clients with more similar data distributions.

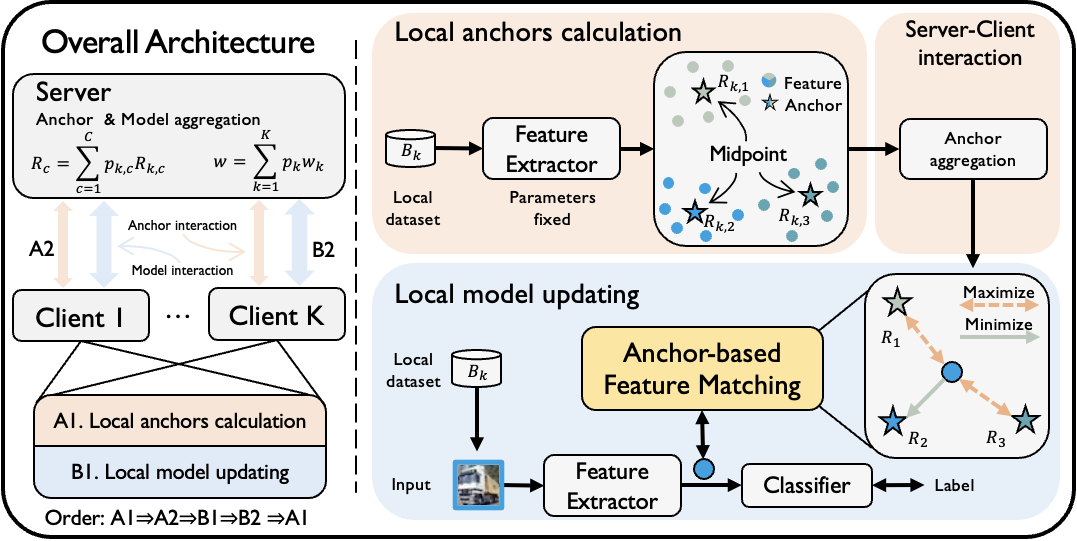

IEEE Transactions on Signal Processing (TSP), 2023 Paper / IEEE / BibTeX / Code (PyTorch, PaddlePaddle, MindSpore)

We propose to align the category-wise feature spaces of clients in FL, which achieves favorable performance with a theoretical convergence guarantee.

Degree: Bachelor

Period: 2018.09 - 2022.06

Major: Information Engineering (AI Class)

GPA: 3.94/4.3 (ranked 1st out of 150)

Reviewer:

Life: I love playing basketball, listening to rap music, and travelling.

Derived from Jon Barron's website.